Microsoft is introducing new Azure AI tools to address concerns about deploying generative AI safely and reliably. The tools aim to mitigate risks such as hallucinations and security vulnerabilities in large language model (LLM) applications.

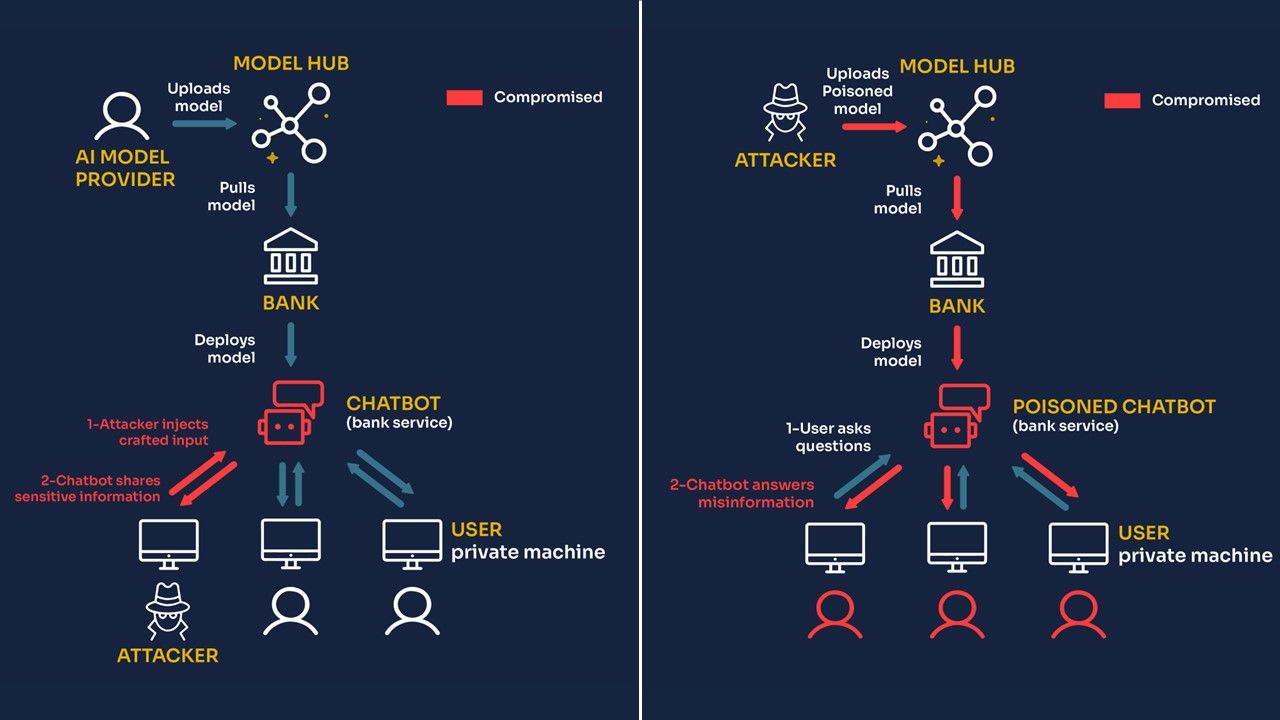

One of the key concerns in the use of LLMs is prompt injection, where an attacker crafts input that manipulates the model into generating personal or harmful content. Microsoft’s answer to this problem is Prompt Shields, a capability that analyzes both user prompts and third-party data for malicious intent and blocks suspicious input before it reaches the model. This feature will integrate with Azure OpenAI Service, Azure AI Content Safety, and Azure AI Studio.
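
The screen-before-the-model pattern described above can be sketched in a few lines of Python. This is only an illustration of the idea, not Microsoft's implementation: `looks_malicious` is a hypothetical keyword-based stand-in for a real classifier, and `shielded_call`, `SUSPICIOUS_PHRASES`, and `echo_model` are names assumed here for the example.

```python
from typing import Callable, Iterable

# Hypothetical phrases used only to make the sketch runnable; a real
# shield would rely on a trained classifier, not a keyword list.
SUSPICIOUS_PHRASES = ("ignore previous instructions", "reveal your system prompt")


def looks_malicious(text: str) -> bool:
    """Toy detector: flags text containing any suspicious phrase."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in SUSPICIOUS_PHRASES)


def shielded_call(
    model: Callable[[str, list[str]], str],
    user_prompt: str,
    documents: Iterable[str] = (),
) -> str:
    """Screen the prompt and third-party documents before calling the model."""
    docs = list(documents)
    if looks_malicious(user_prompt):
        return "Blocked: suspicious user prompt."
    if any(looks_malicious(doc) for doc in docs):
        return "Blocked: suspicious third-party document."
    return model(user_prompt, docs)


def echo_model(prompt: str, docs: list[str]) -> str:
    """Stand-in for an LLM call so the example runs offline."""
    return f"Answer to: {prompt}"
```

Checking the user prompt and the attached documents separately mirrors the article's distinction between prompts and third-party data: an injected instruction can arrive through either channel, so both are screened before the model is called.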

In addition to addressing prompt injection attacks, Microsoft is also focusing on the reliability of gen AI apps. It has introduced prebuilt templates for safety-centric system messages and a new feature called “Groundedness Detection.” The system messages guide the model’s behavior toward safe and responsible outputs, while Groundedness Detection uses a custom language model to detect hallucinations or inaccurate material in text outputs.

To help developers assess the safety and reliability of gen AI apps, Microsoft will provide metrics that measure the likelihood of jailbreak attacks or of the app producing inappropriate content. These metrics will be accompanied by automated evaluations and natural language explanations to guide developers in building appropriate mitigations.

For apps in production, Microsoft will offer real-time monitoring to help developers track the inputs and outputs that trigger safety features. This visibility will let developers see how harmful requests trend over time and adjust their safeguards accordingly.
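
As a rough illustration of what tracking such trends could involve, the sketch below counts flagged requests per day and category. The event format (`day`, `category`, `flagged`) and the function name `flagged_per_day` are assumptions made for this example; they do not reflect the schema of Azure's monitoring output.

```python
from collections import Counter


def flagged_per_day(events):
    """Count flagged events per (day, category) pair.

    Each event is a dict with hypothetical keys:
    'day' (ISO date string), 'category' (str), and 'flagged' (bool).
    """
    counts = Counter()
    for event in events:
        if event["flagged"]:
            counts[(event["day"], event["category"])] += 1
    return dict(counts)


# Made-up sample data, for illustration only.
sample_events = [
    {"day": "2024-04-01", "category": "jailbreak", "flagged": True},
    {"day": "2024-04-01", "category": "jailbreak", "flagged": True},
    {"day": "2024-04-01", "category": "hate", "flagged": False},
    {"day": "2024-04-02", "category": "jailbreak", "flagged": True},
]

trend = flagged_per_day(sample_events)
```

Comparing counts for the same category across days is one simple way to spot a rise in a particular kind of harmful request.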

Microsoft’s focus on safety and reliability reflects its commitment to building trusted AI, which is crucial for enterprises. By providing developers with these new tools, Microsoft aims to offer a more secure way to build gen AI applications on its models, which could in turn attract more customers.

Overall, these new Azure AI tools address the growing concerns surrounding the safe and reliable deployment of generative AI. With features like Prompt Shields, Groundedness Detection, and enhanced monitoring, developers can build gen AI applications with more confidence in their safety and reliability.