The rapid progress of large language models (LLMs) has reshaped the field of artificial intelligence. However, recent releases suggest that the pace of improvement may be slowing. OpenAI's releases, from GPT-3 to GPT-4o, have shown smaller gains in power and range with each generation. Other LLMs, such as Claude 3 and Gemini Ultra, appear to be converging on similar benchmark results as well.

The implications of this trend are significant. One possible outcome is that developers will focus on specialization, building more AI agents that serve narrow use cases and specific user communities. That approach acknowledges that a single system able to handle everything is not realistic. The dominant user interface may also shift from open-ended chatbots to more constrained formats, with guardrails and restrictions that improve the user experience. One example is an AI system that scans a document and offers suggestions.

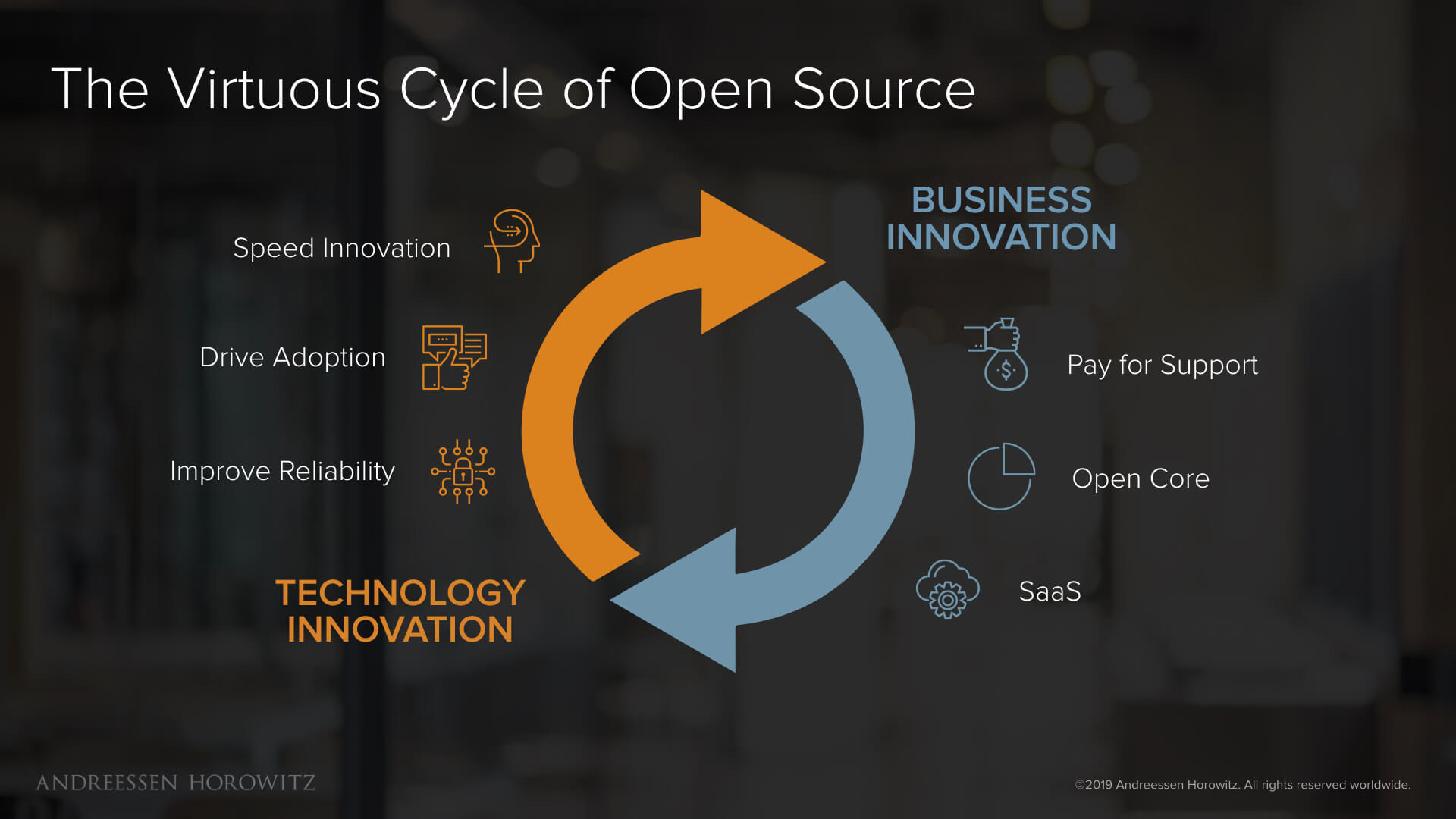

Open-source LLMs may also play a more prominent role if the major players stop producing substantial advances. While developing LLMs is costly, open-source options like Mistral and Llama could hold their own by competing on features, ease of use, and multimodal capabilities.

The availability of training data is another crucial factor. As LLM developers approach the limits of public text data, they will need to draw on other sources such as images and video. This shift could improve how models handle non-text inputs and deepen their understanding of queries.

New LLM architectures may also emerge. Transformers have dominated the field, but alternatives such as Mamba, a state space model, have shown promise. If progress with transformer-based LLMs slows, interest in exploring alternative architectures could grow.

Developers, designers, and architects working in AI should plan with the future of LLMs in mind. One pattern that may emerge is increased competition on features and ease of use, leading to a degree of commoditization similar to what has been seen in other technology domains. While LLM options may still differ from one another, they could become broadly interchangeable.
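For readers who build on these models, one way to picture "broadly interchangeable" is application code that depends only on a small shared interface. The sketch below is purely illustrative: `TextModel`, `StubModelA`, `StubModelB`, and `suggest_edits` are hypothetical names invented for this example, and the stub classes stand in for real vendor adapters without calling any actual API.

```python
from dataclasses import dataclass
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical minimal interface that any LLM backend could satisfy."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubModelA:
    """Stand-in for one vendor's model (assumption: a real adapter would call that vendor's API)."""

    name: str = "model-a"

    def complete(self, prompt: str) -> str:
        # Fake "completion": echo the prompt in upper case.
        return f"[{self.name}] {prompt.upper()}"


@dataclass
class StubModelB:
    """Stand-in for a second, unrelated vendor's model."""

    name: str = "model-b"

    def complete(self, prompt: str) -> str:
        # Fake "completion": echo the prompt reversed.
        return f"[{self.name}] {prompt[::-1]}"


def suggest_edits(model: TextModel, document: str) -> str:
    """Application logic that depends only on the interface, not on a vendor."""
    return model.complete(f"Suggest edits for: {document}")


# Swapping backends becomes a configuration choice rather than a code change.
BACKENDS = {"a": StubModelA(), "b": StubModelB()}

if __name__ == "__main__":
    for key, backend in BACKENDS.items():
        print(key, "->", suggest_edits(backend, "hi"))
```

If models do commoditize in this way, the choice of backend would come down to cost, features, and ease of use, while the application code above stays unchanged.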

In conclusion, the future of LLMs is uncertain, but their progress is closely tied to AI innovation. Developers need to stay informed and adapt to the changing landscape of these models.