**The Growing Threat of Deepfakes**

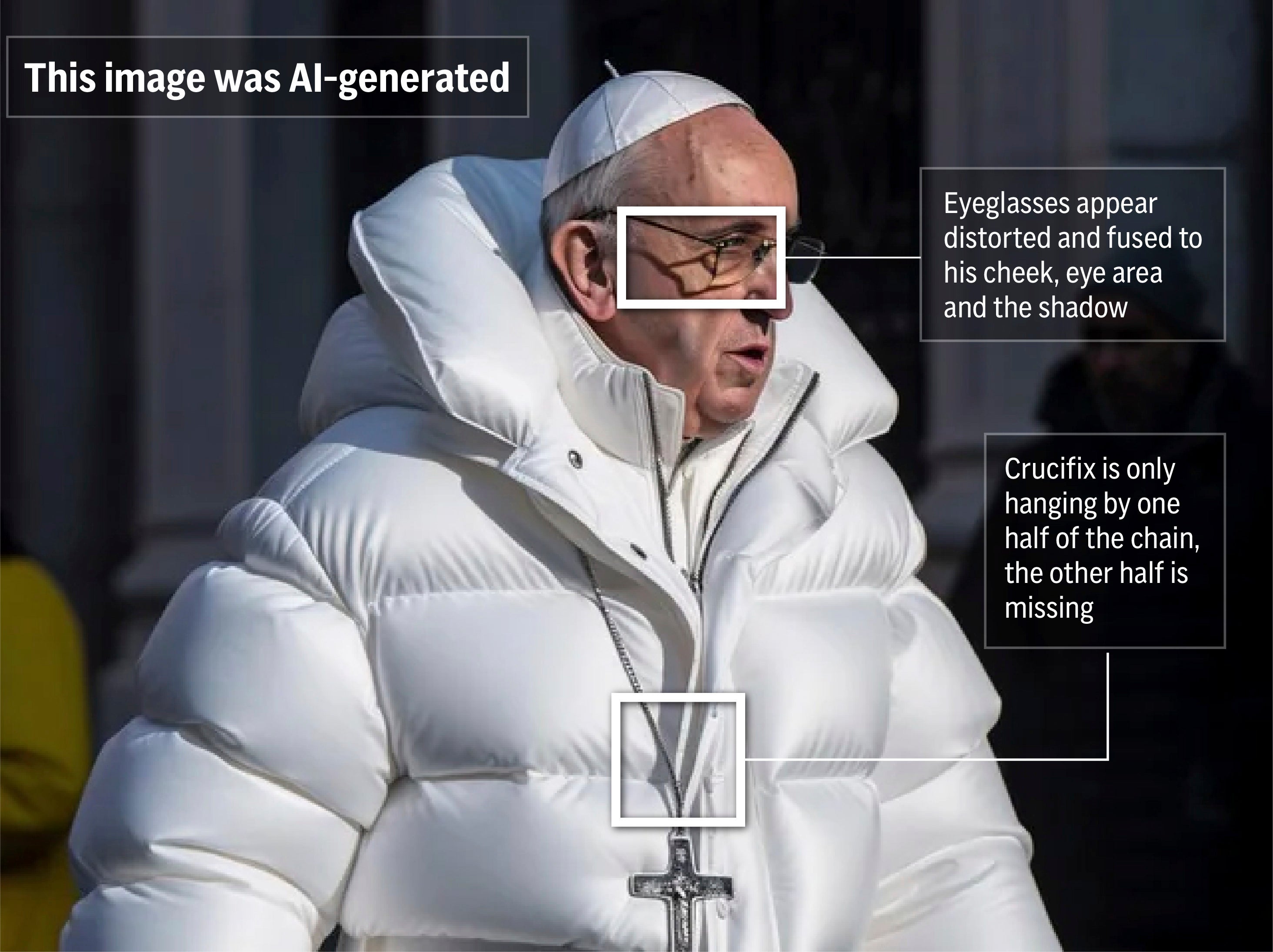

Deepfakes, a form of adversarial AI, are rapidly becoming one of the most prevalent and dangerous types of AI attacks. According to Deloitte, losses related to deepfakes are projected to reach $40 billion by 2027, a compound annual growth rate of 32%. The banking and financial services industry is particularly vulnerable to these attacks. In 2023 alone, deepfake incidents increased by 3,000%, and a further 50% to 60% increase is predicted for 2024, with an estimated 140,000 to 150,000 cases globally. The latest generation of generative AI tools and platforms lets attackers create deepfake videos, impersonated voices, and fraudulent documents at low cost. As a result, deepfake fraud now costs contact centers an estimated $5 billion annually.
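The growth figures above can be sanity-checked with a little compound-growth arithmetic. The sketch below is illustrative only: it assumes the 32% rate compounds over the four years from 2023 to 2027 (the paragraph does not state a base year), it assumes the 140,000 to 150,000 estimate is the 2024 total after the 50% to 60% increase, and the helper name `implied_base` is hypothetical.

```python
def implied_base(target: float, rate: float, periods: int) -> float:
    """Back out the starting value that compounds to `target`
    at `rate` per period over `periods` periods."""
    return target / (1 + rate) ** periods


# Assumption: the 32% CAGR runs over 4 years (2023 -> 2027).
base_losses_bn = implied_base(target=40.0, rate=0.32, periods=4)

# Assumption: 140,000-150,000 is the 2024 total after a 50%-60% rise,
# so the widest implied 2023 range pairs the low total with the high
# growth rate and the high total with the low growth rate.
incidents_2023_low = implied_base(target=140_000, rate=0.60, periods=1)
incidents_2023_high = implied_base(target=150_000, rate=0.50, periods=1)

print(f"Implied 2023 losses: ${base_losses_bn:.1f} billion")
print(f"Implied 2023 incidents: {incidents_2023_low:,.0f} to {incidents_2023_high:,.0f}")
```

Under these assumptions the projection implies a 2023 loss baseline of roughly $13 billion and somewhere between about 87,500 and 100,000 incidents in 2023. A different base year or pairing of the ranges would shift both figures.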

**The Rise of AI-Powered Fraud**

The rapid growth of deepfakes is part of a larger trend in AI-powered fraud. Bloomberg reported that a thriving underground market on the dark web already sells scamming software at prices ranging from as little as $20 to thousands of dollars. Sumsub’s Identity Fraud Report for 2023 further documents the global increase in AI-powered fraud. Together, these figures show why enterprises urgently need to address the risks of adversarial AI attacks.

**Enterprises Unprepared for Deepfakes**

Many enterprises are unprepared to defend against deepfakes and other forms of adversarial AI. Ivanti’s 2024 State of Cybersecurity Report finds that one in three enterprises has no strategy in place to identify and defend against such attacks. That gap is concerning given that 74% of surveyed enterprises have already experienced AI-powered threats and 89% believe these threats are only just beginning. In addition, 60% of CISOs, CIOs, and IT leaders fear that their organizations are ill-equipped to defend against AI-powered threats. Deepfakes are also increasingly used within orchestrated campaigns that combine phishing, software vulnerabilities, ransomware, and API-related vulnerabilities, which makes them even more dangerous.

**Targeting CEOs**

One specific area of concern is the targeting of CEOs through deepfake attacks. Cybersecurity CEOs who spoke to VentureBeat on condition of anonymity described how deepfakes have evolved from easily identifiable fakes into videos that are almost indistinguishable from reality. CEOs are particularly attractive targets because a convincing impersonation of a chief executive can be used to defraud a company of millions of dollars. Nation-states and large-scale cybercriminal organizations are investing heavily in generative adversarial network (GAN) technologies, further exacerbating the threat. CrowdStrike CEO George Kurtz has emphasized the sophistication of deepfake attackers, noting thousands of attempts this year alone. In a recent interview, Kurtz expressed concern about how easily deepfake technology can create false narratives that manipulate people’s behavior and actions.

**The Need for Action**

Enterprises must take immediate action to defend against deepfakes and other adversarial AI attacks. The Department of Homeland Security has recognized the increasing threat of deepfake identities and has issued a guide to help organizations mitigate this risk. To stay at parity with attackers, enterprises need to invest in advanced AI and machine learning technologies and to develop strategies for identifying and defending against adversarial AI attacks, with a particular focus on deepfakes. Doing so can protect them from significant financial losses and reputational damage.